As we all know, object detection is the task of detecting objects in an image in the form of a bounding box. What if we wanted to get a more accurate information about the object? You’d go for more than a rectangle (bounding box), maybe a polygon which represents the object more tightly. But that’s still not the best way. The best way would be to assign each pixel inside the bounding box which actually has the object. This task is called as Instance segmentation, where you segment the object instances.

Why is this really needed? Imagine in a world where UAVs and Aerial Imagery is used to capture minuscule data snippets to give intelligence that can be consumed for various purposes. In a scenario like above there can be drones employed to survey minute detailing and inspection to monitor furniture repository across a large warehouse.

“We live in the era of Digital and the use of Drones and UAVs have effectively enhanced the possibilities of amalgamating AI to detect and accurately identify objects in large Retail Marts”

You absolutely need to know exactly what the coordinates of the objects detected can entail, and derive many pre-emptive insights to utilize the intelligence for the betterment and management of asset intensive goods repository, where just detecting an object may not specifically be enough, but more precision can derive more insights in terms of the asset manageability and supervision. That’s what we will be dwelling into in the subsequent write ups to see how such an extended form of Computer Vision can be consumed in a Furniture Retail Mart for example.

To accomplish such a feat, we will be primarily looking at extensively using VGG Image Annotator (VIA) techniques merged with CNN Deep Learning Libraries, train and test the data models and test the output across a set of Furniture based imagery.

This is again another dimension of Computer Vision principle within the periphery of Artificial Intelligence.

To enable an Image Instance Segmentation ecosystem, would involve a Six Steps process, invoking libraries and extension with a customised code developed to actualise the boundaries of an Image Segmented beyond a Box Size or a Polygon Size etc. and mapping boundaries that can match up the shape of the object in question. To do that, we would be using VGG Image Annotator (VIA) and Google Co-Lab Libraries as a stack, for this pilot implementation.

As a first step however we need to look at creating a Cloning Repository of Images to be tested. Once this is done, we then look at preparing the Image Data to be used for training and testing. In order to this, we use VGG Image Annotator (VIA) to run the stream of image files.

In order to train the image models, we then look to use Google Co-Labs libraries, upload the VIA enabled Images and the consume the google based available libraries to start training the data models.

There will be a requirement of modifying the code depending on the objects you wish to have instance segmented, and you can look to manage those on the co-lab browser itself.

Google Colab has a repository of libraries around Keras, TensorFlow, PyTorch, and OpenCV that can be easily used and modified based on need, to look at building Deep Learning based applications, Image Instance Segmentation being one of the forms.

Once the model is trained, you can look to test the set of images and verify image instance segmented output and measure accuracy.

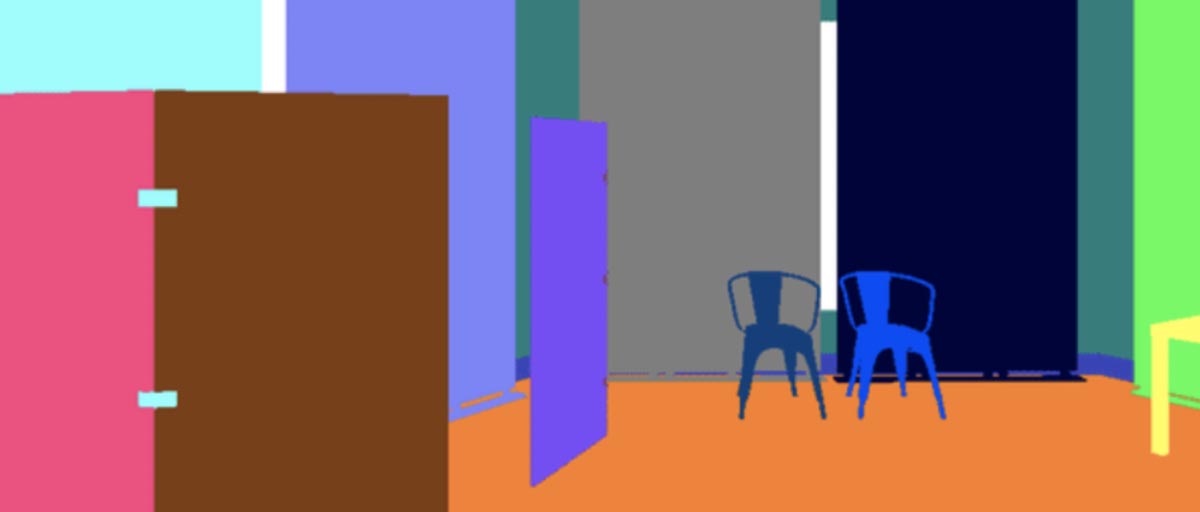

Typical Output looks like the following:

As discussed, a combination of AI layered with Deep Learning usecase that can look to go a step beyond just Object Detection, and can create highly sophisticated insights especially from an aerial imagery perspective.

Overall as a part of the above usecase we predominantly figured out another way to precisely conclude and detect objects using the VIA and DL techniques overall a generic Object Detection CNN models.

For this particular usecase we used VGG Image Annotator (VIA) technique merged with DL libraries on Google Colab tool to precisely train and execute an image instance segmentation prototype for a Furniture Retail Mart.

The instance segmentation problem intends to precisely detect and delineate objects in images. Most of the current solutions rely on deep convolutional neural networks but despite this fact proposed solutions are very diverse. Some solutions approach the problem as a network problem, where they use several networks or specialize a single network to solve several tasks.

A different approach tries to solve the problem as an annotation problem, where the instance information is encoded in a mathematical representation. This work proposes a solution based in the DCME technique to solve the instance segmentation with a single segmentation network.

Different from others, the segmentation network decoder is not specialized in a multi-task network. Instead, the network encoder is repurposed to classify image objects, reducing the computational cost of the solution.

Especially in the case of Aerial Imagery, this VIA enabled technique essentially helps to dig deeper insights compared to a generic object detection modeling, and thus uses a peripheral diametry over a more familiar Box/Polygon diametry approach followed by generic Object Detection libraries.

The peripheral diametry of individual objects identified in an image provides greater precision, and hence this method is an additional boon to the Computer Vision purview of possibilities.